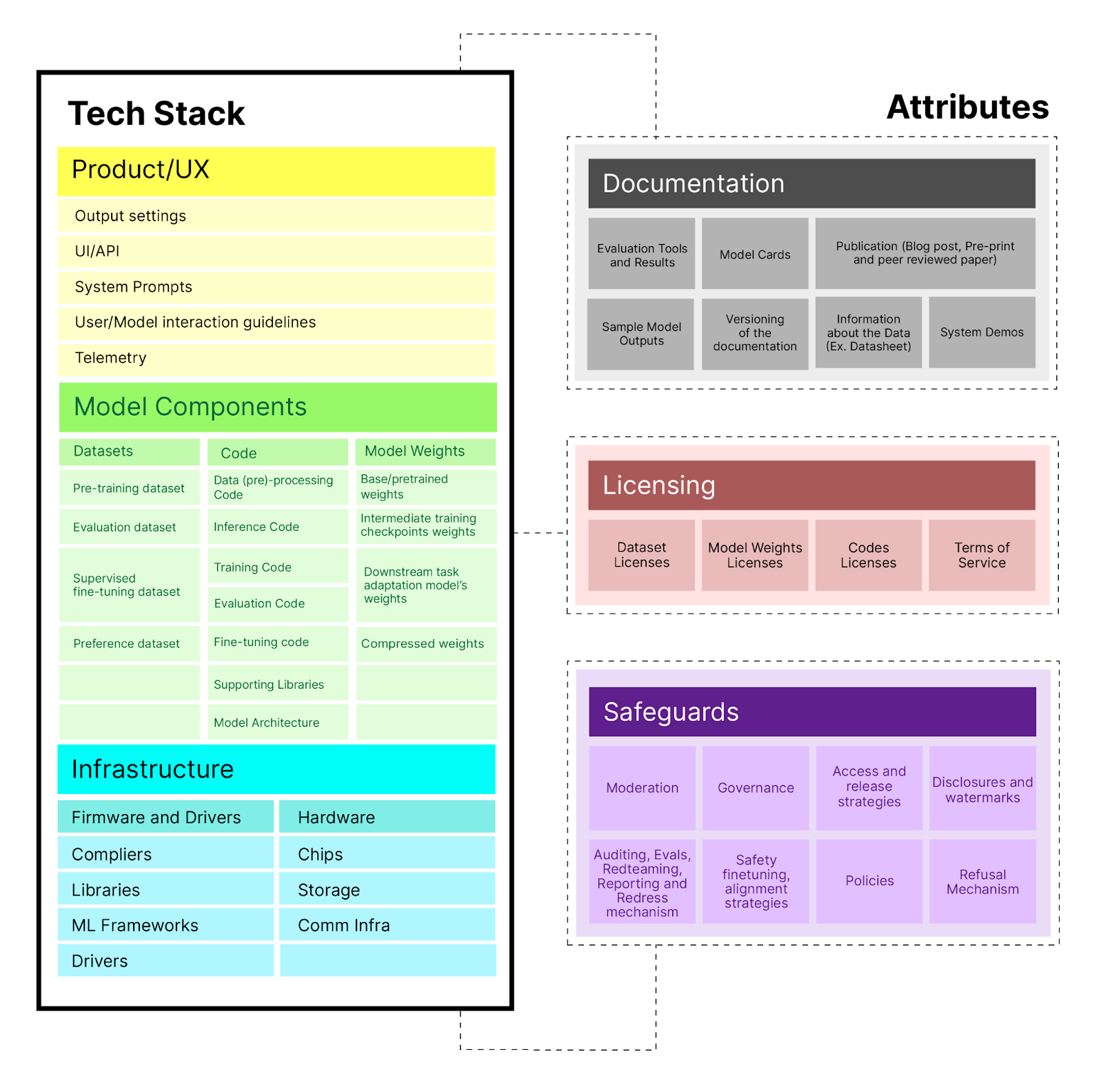

| Rank | Model | Weights | Data | Open-source license | Organization |
|---|---|---|---|---|---|
| 1 | K2-65B | ✅ | ✅ | ✅ | LLM360 |
| 2 | OLMo | ✅ | ✅ | ✅ | AllenAI (AI2) |
| 3 | Qwen2.5 32B | ✅ | ❌ | ✅ | Alibaba |
| 4 | GRIN-MoE | ✅ | ❌ | ✅ | Microsoft |
| 5 | Phi-3.5 | ✅ | ❌ | ✅ | Microsoft |
| 6 | Yi-1.5 | ✅ | ❌ | ✅ | 01.AI |
| 7 | Mixtral 8x22B | ✅ | ❌ | ✅ | Mistral |
| 8 | Qwen2.5 72B | ✅ | ❌ | ❌ | Alibaba |
| 9 | Llama 3.1 | ✅ | ❌ | ❌ | Meta |
| 10 | DeepSeek 2.5 | ✅ | ❌ | ❌ | DeepSeek |

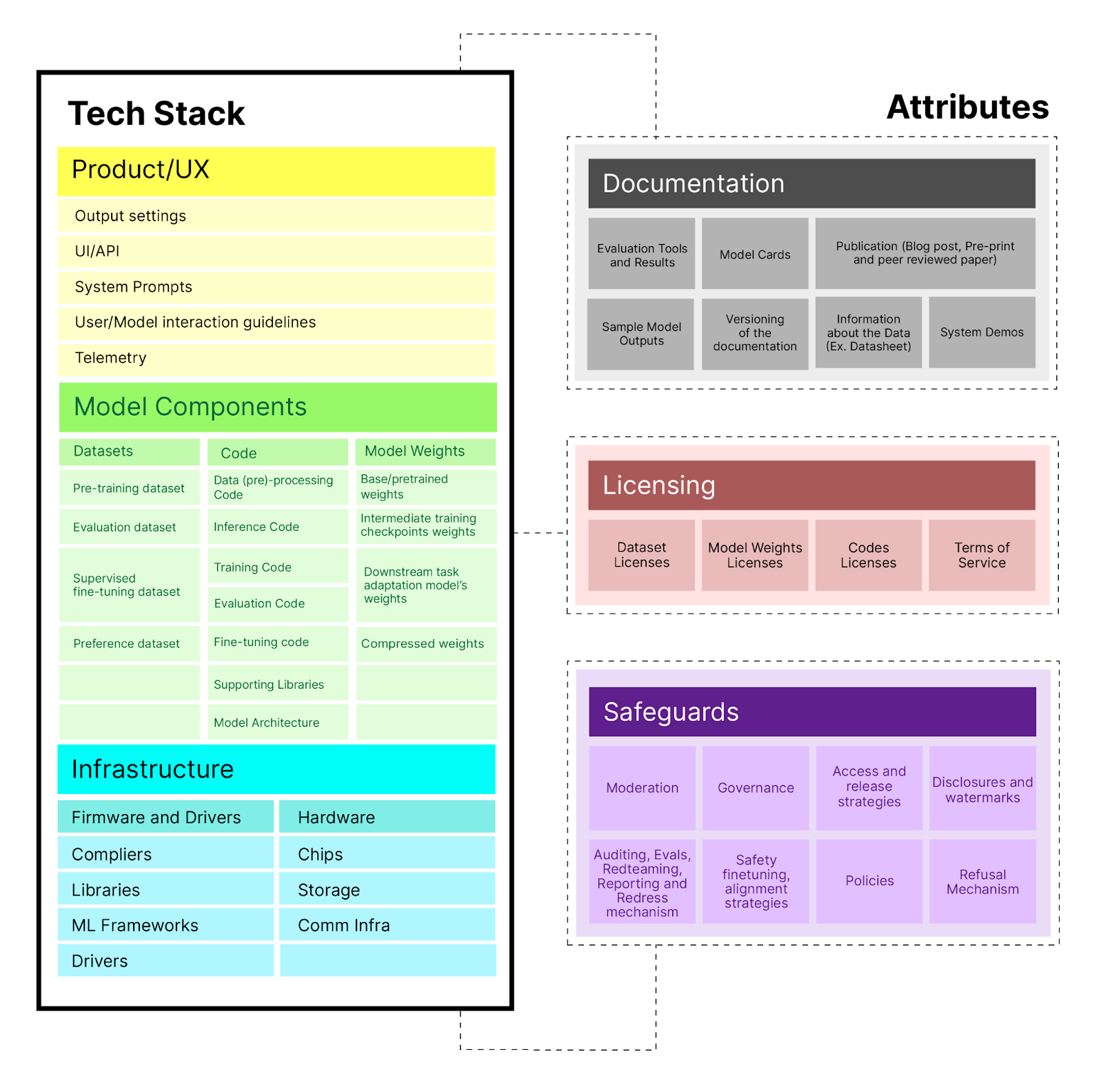

🚨 This leaderboard is deliberately simplified. Openness covers much more than a model's weights, training data, and license: the training code, the model architecture, the data preprocessing code, and other components also matter. Please refer to the Model Openness Framework (MOF) paper to learn more. Future updates will add more models and more components for a more detailed leaderboard. The purpose of this leaderboard is to let the world know that some models claim to be "open" while falling short of openness standards, a practice known as open washing, because of restricted access to training data or training code, the use of custom licenses, and similar limitations. Transparency and accurate representation of AI model openness are crucial.
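The three columns in the table above can also be read as a simple tally. The sketch below is illustrative only: the source does not state how ranks are assigned, so the `score` property (a count of open components) and the names `Entry`, `ENTRIES`, and `group_by_score` are assumptions introduced here. The boolean values are copied from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """One leaderboard row; field names are hypothetical."""
    model: str
    open_weights: bool
    open_data: bool
    open_license: bool

    @property
    def score(self) -> int:
        # Assumed scoring: one point per open component (not stated in the source).
        return int(self.open_weights) + int(self.open_data) + int(self.open_license)


# Values copied from the table, in rank order.
ENTRIES = [
    Entry("K2-65B", True, True, True),
    Entry("OLMo", True, True, True),
    Entry("Qwen2.5 32B", True, False, True),
    Entry("GRIN-MoE", True, False, True),
    Entry("Phi-3.5", True, False, True),
    Entry("Yi-1.5", True, False, True),
    Entry("Mixtral 8x22B", True, False, True),
    Entry("Qwen2.5 72B", True, False, False),
    Entry("Llama 3.1", True, False, False),
    Entry("DeepSeek 2.5", True, False, False),
]


def group_by_score(entries):
    """Group model names by score, highest score first."""
    groups = {}
    for entry in entries:
        groups.setdefault(entry.score, []).append(entry.model)
    return dict(sorted(groups.items(), reverse=True))


if __name__ == "__main__":
    for score, models in group_by_score(ENTRIES).items():
        print(f"{score}/3 open components: {', '.join(models)}")
```

Under this assumed scoring, the groups fall in the same order as the table: two models with all three components open, five with two, and three with only open weights. The order within each group is simply the table's order, which this tally does not explain.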

image source: https://arxiv.org/abs/2405.15802